Companion-based Information Kiosk

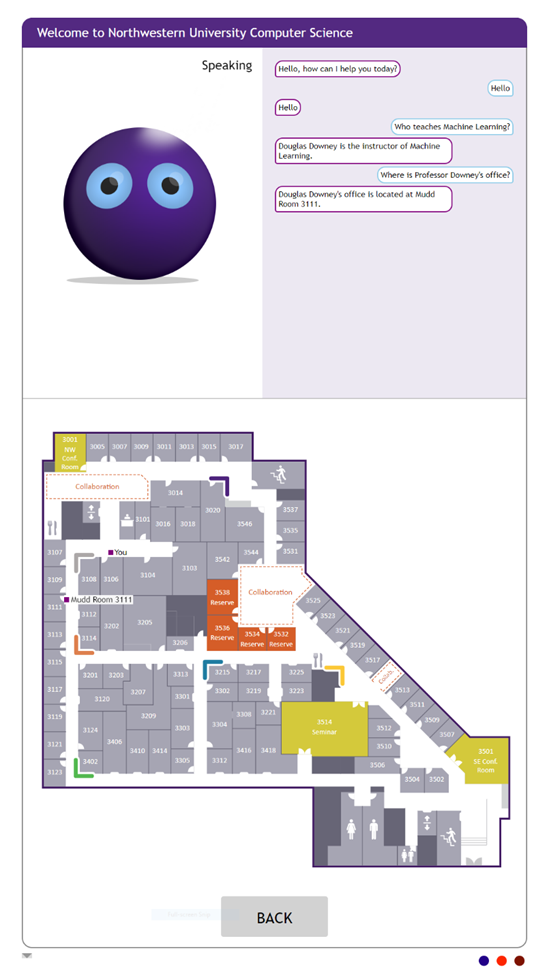

One of our long-term goals with the Companion cognitive architecture is to create systems that people treat as collaborators rather than tools, interacting with them over long periods via natural modalities, with the software incrementally adapting to the people it works with. As an initial step toward exploring the capabilities this requires, we have deployed a multimodal information kiosk in the reception area of the Computer Science Department at Northwestern University. Apart from an interruption during the pandemic, the Kiosk has operated almost continuously since September 2018.

The Kiosk is operated by a Companion. It uses a Kinect camera and microphone, plus a touch screen for display and for interaction when the environment is noisy. Vision and speech processing are handled by a specialized Companion agent that incorporates Microsoft Research's Platform for Situated Intelligence. The vision system determines whether what the Kiosk hears is directed at it or at another person; when speech is directed at the Kiosk, off-the-shelf speech recognition converts it to text. (In compliance with Illinois biometric laws, the Kiosk does not attempt to recognize people by sight or by voice, and no such data is stored.) This was the first system deployed outside of Microsoft to use the platform.
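The addressee check described above can be pictured as a gate in front of the speech recognizer. The following is a minimal sketch under stated assumptions: `PersonTrack`, the "facing the kiosk and nearby" heuristic, the 2-meter threshold, and the `recognize` callback are all hypothetical stand-ins, not the Kiosk's actual vision model and not the Platform for Situated Intelligence API. The track deliberately carries no identity, in keeping with the Kiosk's policy of not recognizing people.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PersonTrack:
    # Hypothetical per-person observation from the camera. It holds no
    # identity information: only orientation and distance.
    facing_kiosk: bool
    distance_m: float


def is_addressed_to_kiosk(people: list[PersonTrack], max_distance_m: float = 2.0) -> bool:
    """Assumed heuristic: speech counts as directed at the kiosk if
    someone within max_distance_m is facing it."""
    return any(p.facing_kiosk and p.distance_m <= max_distance_m for p in people)


def handle_utterance(audio: bytes, people: list[PersonTrack],
                     recognize: Callable[[bytes], str]) -> Optional[str]:
    """Run speech recognition only when the vision gate says the kiosk is addressed."""
    if not is_addressed_to_kiosk(people):
        return None  # probably person-to-person conversation: ignore it
    return recognize(audio)  # stand-in for an off-the-shelf recognizer
```

Gating before recognition means conversations between people standing near the kiosk are simply never transcribed.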

Natural language dialogue uses a two-layer process. For highly specialized queries, e.g. "Where is the bathroom?" or "When is the next shuttle?", simple string processing is used. All other queries are handled by the Companion Interaction Manager agent, which uses its general-purpose natural language system, augmented with analogical Q/A training, to construct answers as needed from information about people, classes, topics, and events in the Companion's knowledge base.
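The two-layer dispatch can be sketched as a cheap pattern-matching fast path with a fallback to the general system. In this sketch the regular expressions are hypothetical, the bracketed answers are placeholders rather than real directions or schedules, and `interaction_manager` is an assumed callable standing in for the Companion Interaction Manager agent.

```python
import re
from typing import Callable


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so simple patterns match spoken variants."""
    return re.sub(r"[^a-z0-9 ]+", "", text.lower()).strip()


# Layer 1 (hypothetical patterns, placeholder answers): string matching for a
# few very frequent, highly specialized questions.
FAST_PATH = [
    (re.compile(r"\bwhere\b.*\b(bathroom|restroom)s?\b"), "[directions to the restrooms]"),
    (re.compile(r"\bwhen\b.*\bnext shuttle\b"), "[next shuttle departure time]"),
]


def answer(question: str, interaction_manager: Callable[[str], str]) -> str:
    q = normalize(question)
    for pattern, response in FAST_PATH:
        if pattern.search(q):
            return response
    # Layer 2: everything else goes to the general natural language system.
    return interaction_manager(question)
```

For example, `answer("Where is the bathroom?", im)` takes the fast path, while a question about who teaches a course falls through to `im`. Keeping the first layer tiny makes it predictable, and anything it does not recognize still gets a full answer from the second layer.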

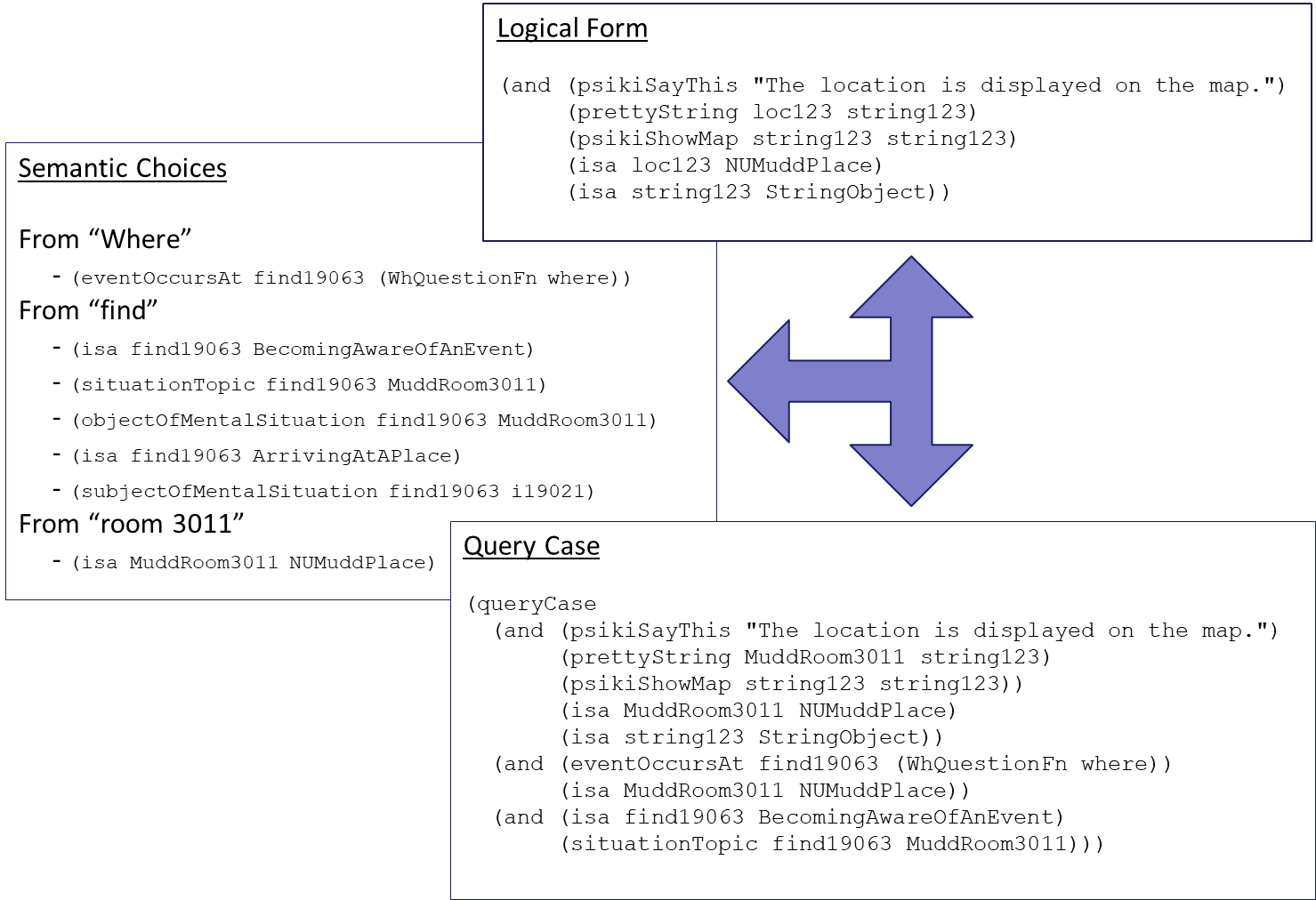

Here is an example of a query case constructed for a "where" question:

Analogical Q/A training is both data-efficient and computationally cheap. The default training set for the Kiosk uses an average of 11 question/answer pairs per question type, and training from scratch takes only five minutes on a Surface Pro tablet.
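To make the idea of a query case more concrete, here is a deliberately simplified toy sketch. Companions' analogical processing is built on structure-mapping (SME); this code swaps in a much cruder greedy alignment of facts that share a predicate and arity under a consistent one-to-one entity mapping. The predicates (`isa`, `owns`, `askLocation`, `officeOf`, and so on), the symbol conventions, and the `QACase` structure are all hypothetical illustrations, not the Kiosk's representations. The best-aligned training case is retrieved and its query is transferred to the new question by substituting mapped entities.

```python
from dataclasses import dataclass


def is_mappable(sym: str) -> bool:
    # Assumed convention for this sketch: capitalized symbols are fixed
    # collections, "?"-prefixed symbols are open answer variables, and
    # everything else is an entity that may be mapped to a new entity.
    return not (sym[:1].isupper() or sym.startswith("?"))


@dataclass
class QACase:
    facts: list  # hypothetical semantic facts from parsing a training question
    query: list  # the knowledge-base query paired with that question


def align(case_facts, probe_facts):
    """Greedily align facts with the same predicate and arity under a
    consistent one-to-one entity mapping. Returns (score, mapping)."""
    mapping, used, score = {}, set(), 0
    for cf in case_facts:
        for i, pf in enumerate(probe_facts):
            if i in used or pf[0] != cf[0] or len(pf) != len(cf):
                continue
            trial, ok = dict(mapping), True
            for a, b in zip(cf[1:], pf[1:]):
                if not is_mappable(a):
                    ok = a == b            # fixed symbols must match exactly
                elif not is_mappable(b):
                    ok = False             # an entity cannot map to a fixed symbol
                elif a in trial:
                    ok = trial[a] == b     # keep earlier correspondences
                elif b in trial.values():
                    ok = False             # enforce a one-to-one mapping
                else:
                    trial[a] = b
                if not ok:
                    break
            if ok:
                mapping, score = trial, score + 1
                used.add(i)
                break
    return score, mapping


def answer_query(probe_facts, cases):
    """Retrieve the best-aligned case and transfer its query, or None if nothing aligns."""
    best = max(cases, key=lambda c: (align(c.facts, probe_facts)[0], -len(c.facts)))
    score, mapping = align(best.facts, probe_facts)
    if score == 0:
        return None
    return [tuple([f[0]] + [mapping.get(a, a) for a in f[1:]]) for f in best.query]


# Hypothetical training cases: "Where is Ken's office?" and "Who teaches <course>?"
office_case = QACase(
    facts=[("isa", "ken", "Person"), ("isa", "off1", "Office"),
           ("owns", "ken", "off1"), ("askLocation", "off1")],
    query=[("officeOf", "ken", "?ans")],
)
teacher_case = QACase(
    facts=[("isa", "c1", "Course"), ("askWhoTeaches", "c1")],
    query=[("teacherOf", "c1", "?ans")],
)
# New question: "Where is Maria's office?"
probe = [("isa", "maria", "Person"), ("isa", "o2", "Office"),
         ("owns", "maria", "o2"), ("askLocation", "o2")]
print(answer_query(probe, [office_case, teacher_case]))
# [('officeOf', 'maria', '?ans')]
```

Because each case generalizes by substitution, a handful of examples per question type can cover many concrete questions, which is one intuition for why so few training pairs are needed.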

To serve a broader range of people, we are also building an online version of the Kiosk that will communicate with students, faculty, and staff via Microsoft Teams. Because Teams provides authentication, this version will also support experiments in which Companions build up models, over time, of the people they interact with.

Relevant Paper

- Wilson, J., Chen, K., Crouse, M., Nakos, C., Ribeiro, D., Rabkina, I., & Forbus, K. D. (2019). Analogical Question Answering in a Multimodal Information Kiosk. In Proceedings of the Seventh Annual Conference on Advances in Cognitive Systems. Cambridge, MA.

Relevant Projects

Reasoning for Social Autonomous Agents
