Companion Cognitive Systems

We are developing Companion Cognitive Systems, a new cognitive architecture for software that can effectively be treated as a collaborator. Here is our vision: Companions will help their users work through complex arguments, automatically retrieving relevant precedents, providing cautions and counter-indications as well as supporting evidence. Companions will be capable of effective operation for weeks and months at a time, assimilating new information, generating and maintaining scenarios and predictions. Companions will continually adapt and learn, about the domains they are working in, their users, and themselves.

The ideas we are using to achieve this vision include:

Analogical learning and reasoning: Our working hypothesis is that the flexibility and breadth of human commonsense reasoning and learning arise from analogical reasoning and learning from experience. Within-domain analogies provide rapid, robust predictions. Analogies between domains can yield deep new insights and facilitate learning from instruction. First-principles reasoning emerges slowly, as generalizations are created incrementally from examples via analogical comparisons. This hypothesis suggests a very different approach to building robust cognitive software than is typically proposed. Reasoning and learning by analogy are central operations, rather than exotic ones undertaken only rarely. Accumulating and refining examples becomes central to building systems that can learn and adapt. Our cognitive simulations of analogical processing (SME for analogical matching, MAC/FAC for similarity-based retrieval, and SAGE for generalization) form the core components for learning and reasoning.
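To make the idea of similarity-based retrieval followed by analogical matching more concrete, here is a toy sketch of a two-stage retrieval loop: a cheap, structure-free filter over all stored cases, then a more expensive structural comparison over the survivors. It is not the SME or MAC/FAC implementation. The case format (flat tuples of a predicate and its arguments), the function names, and the greedy matcher used in the second stage are all assumptions made for illustration.

```python
from collections import Counter

# Assumption: a case is a set of flat facts, each a tuple (predicate, arg1, arg2, ...).

def content_vector(case):
    """Count how often each predicate occurs; ignores which entities are involved."""
    return Counter(fact[0] for fact in case)

def cheap_score(probe, case):
    """Stage 1: dot product of the two predicate-count vectors."""
    pv, cv = content_vector(probe), content_vector(case)
    return sum(pv[p] * cv[p] for p in pv)

def structural_score(probe, case):
    """Stage 2 stand-in: greedily count facts that align under one
    consistent, one-to-one mapping from probe entities to case entities."""
    mapping, used_facts, matched = {}, set(), 0
    for pfact in sorted(probe):
        for cfact in sorted(case):
            if cfact in used_facts or cfact[0] != pfact[0] or len(cfact) != len(pfact):
                continue
            trial, taken, ok = dict(mapping), set(mapping.values()), True
            for a, b in zip(pfact[1:], cfact[1:]):
                if a in trial:
                    ok = trial[a] == b          # must agree with earlier choices
                elif b in taken:
                    ok = False                  # target entity already claimed
                else:
                    trial[a] = b
                    taken.add(b)
                if not ok:
                    break
            if ok:
                mapping, matched = trial, matched + 1
                used_facts.add(cfact)
                break
    return matched

def retrieve(probe, memory, k=2):
    """Shortlist the k cheapest-to-score candidates, then pick the best structural match."""
    shortlist = sorted(memory, key=lambda n: cheap_score(probe, memory[n]), reverse=True)[:k]
    return max(shortlist, key=lambda n: structural_score(probe, memory[n]))

# Hypothetical example: a water-flow probe against a small case library.
probe = {("flow", "beaker", "vial", "water"),
         ("greater", "p_beaker", "p_vial"),
         ("liquid", "water")}
memory = {
    "heat_flow": {("flow", "coffee", "ice", "heat"),
                  ("greater", "t_coffee", "t_ice"),
                  ("liquid", "coffee")},
    "ordering": {("greater", "a", "b"), ("greater", "b", "c"), ("greater", "c", "d")},
    "colors": {("color", "apple", "red")},
}
print(retrieve(probe, memory))
```

In this example the "ordering" case ties with "heat_flow" on the cheap score because it repeats the `greater` predicate, but only one of its facts survives the consistency check, so the structural stage prefers "heat_flow". That gap between surface overlap and structural overlap is the reason for running the second, more expensive stage.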

Qualitative Representations: We hypothesize that qualitative representations are a key building block of human conceptual structure. Continuous phenomena and systems are ubiquitous in our environment and strongly constrain the ways we think about it. This holds for the physical world, where qualitative representations have a long track record of providing human-level reasoning and performance, and also for social reasoning. Qualitative representations carve up continuous phenomena into symbolic descriptions that serve as a bridge between perception and cognition, facilitate everyday reasoning and communication, and help ground expert reasoning.
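As a small illustration of carving a continuous quantity into symbolic descriptions, the sketch below maps numeric temperature readings onto qualitative regions separated by landmark values, plus a sign-of-change trend. The landmarks (0 and 100 degrees Celsius for water), the region names, and the function names are assumptions chosen for the example and are not taken from the Companion representations themselves.

```python
from bisect import bisect_right

# Assumed landmark values: water at sea-level pressure, degrees Celsius.
LANDMARKS = [0.0, 100.0]
REGIONS = ["solid", "liquid", "gas"]   # one region per interval between landmarks

def qualitative_state(temp_c):
    """Return the symbolic region that a numeric temperature falls in."""
    return REGIONS[bisect_right(LANDMARKS, temp_c)]

def qualitative_trend(prev, curr, tolerance=1e-9):
    """Return the sign of change between two readings as a symbol."""
    delta = curr - prev
    if delta > tolerance:
        return "increasing"
    if delta < -tolerance:
        return "decreasing"
    return "steady"

def describe(readings):
    """Collapse a numeric series into a sequence of distinct (state, trend) episodes."""
    episodes = []
    for prev, curr in zip(readings, readings[1:]):
        episode = (qualitative_state(curr), qualitative_trend(prev, curr))
        if not episodes or episodes[-1] != episode:
            episodes.append(episode)
    return episodes

print(describe([-5, -1, 20, 60, 99, 120]))
```

Six numeric readings reduce to three episodes, one per region crossed. A reasoner can then work with statements such as "the water is liquid and warming" without committing to exact values.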

Focus on higher-order cognition: With Companions we are attempting to understand how to build systems that are software social organisms, which reason and learn in human-like ways, from others, using natural modalities. By contrast, many previous cognitive architectures have focused on modeling skill learning or lower-level operations. Our focus on longer time-scales of behavior complements these other investigations.

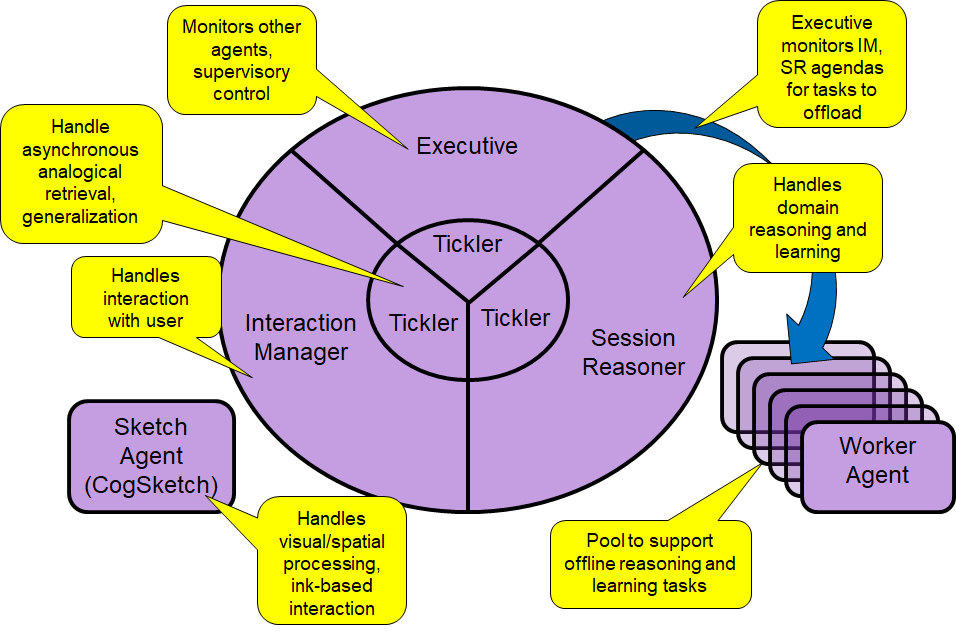

Distributed agent architecture: Companions require a combination of intense interaction, deep reasoning, and continuous learning. We believe that we can achieve this by using a distributed agent architecture, hosted on cluster computers, to provide task-level parallelism. The particular distributed agent architecture we are using evolved from our RoboTA distributed coaching system, which uses KQML as a communication medium between agents. A Companion is made up of a collection of agents, either running on a single machine or spread across the nodes of a cluster. This use of parallelism enables us to explore integrating multiple capabilities. For example, analogical retrieval of relevant precedents can proceed entirely in parallel with other reasoning processes, such as the visual processing involved in understanding a user’s sketched input.

The agents are functionally oriented, as shown in the diagram. When running distributed, agents have their own copies of the knowledge base to avoid contention. Just as a dolphin only sleeps with half of its brain at a time, a Companion running on a cluster is able to spawn worker agents to help conduct experiments.
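The task-level parallelism described above can be pictured with a minimal sketch in which two functionally distinct agents work on the same user turn at once. Threads stand in for agents on separate cluster nodes. The agent names, their behavior, and the message contents are hypothetical, and the `kqml` helper only renders a string in the general KQML shape (a performative followed by keyword parameters); it is not the Companion messaging code.

```python
from concurrent.futures import ThreadPoolExecutor
import time

def kqml(performative, **params):
    """Render a KQML-style message string, e.g. (ask-one :sender a :receiver b ...)."""
    fields = " ".join(f":{key.replace('_', '-')} {value}" for key, value in params.items())
    return f"({performative} {fields})"

def retrieval_agent(query):
    """Hypothetical agent: stands in for analogical retrieval of precedents."""
    time.sleep(0.05)                      # simulate reasoning time
    return f"precedents for {query}"

def sketch_agent(strokes):
    """Hypothetical agent: stands in for visual processing of sketched input."""
    time.sleep(0.05)                      # simulate perception time
    return f"{len(strokes)} strokes processed"

def run_in_parallel(query, strokes):
    """Start both agents together and collect their results when both finish."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        retrieval = pool.submit(retrieval_agent, query)
        sketch = pool.submit(sketch_agent, strokes)
        return {"retrieval": retrieval.result(), "sketch": sketch.result()}

# A request one agent might send to another (all names are illustrative).
print(kqml("ask-one", sender="session-manager", receiver="retriever",
           content="(precedents-for case-17)"))
print(run_in_parallel("case-17", ["s1", "s2", "s3"]))
```

Because neither agent waits on the other, the slower task bounds the response time instead of the sum of both. Giving each agent its own copy of the knowledge base, as noted above, keeps that independence from being lost to contention over shared state.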

The first-generation Companion architecture, a subset of the vision above, became operational in October 2004. Since then, the architecture has evolved steadily, to the point where Companions have been used experimentally in classrooms and in the laboratories of selected research partners.

Selected Relevant Papers

Forbus, K.D. & Hinrichs, T. (2017) Analogy and Qualitative Representations in the Companion Cognitive Architecture. AI Magazine.

Forbus, K. and Hinrichs, T. (2004). Self-modeling in Companion Cognitive Systems: Current Plans. DARPA Workshop on Self-Aware Systems, Washington, DC.

Forbus, K. and Hinrichs, T. (2004). Companion Cognitive Systems: A step towards human-level AI. AAAI Fall Symposium on Achieving Human-level Intelligence through Integrated Systems and Research, October, Washington, DC.

Relevant Projects

Analogical Learning for Companion Cognitive Systems